Scaling Pareto-Efficient Decision Making via Offline Multi-Objective RL

We propose a dataset and benchmark for offline multi-objective RL (MORL), along with a family of offline MORL agents. Published at ICLR 2023.

Introduction

Reinforcement Learning (RL) agents are usually trained and evaluated on a fixed reward function. In this paper, however, we want to train agents with multiple objectives that cannot be simultaneously optimized, a setting known as Multi-Objective Reinforcement Learning (MORL).

For instance, the two objectives for a MuJoCo Hopper are running faster and jumping higher, which cannot both be maximized at once in a physics simulation. More interestingly, the objectives are entangled: the Hopper cannot run without leaving the floor (jumping), but jumping too high also slows its running.

D4MORL

In this paper, we aim to solve MORL through a purely offline approach, but no open-source benchmark or dataset exists for this setting. As a result, we first propose our own dataset and benchmark: Data for MORL (D4MORL). For each environment, we provide 6 variants, one for each combination of 3 preference distributions and 2 return distributions.

The preference distribution is characterized by the empirical distribution of the sampled preferences. Specifically, we use 3 underlying distributions: Uniform, Dirichlet-Wide, and Dirichlet-Narrow, which correspond to 3 levels of entropy: High, Medium, and Low, respectively. Through this design, we are able to examine whether agents can adapt to unseen and out-of-distribution preferences.
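As a rough illustration of how the three preference distributions differ in entropy, the sketch below samples two-objective preferences on the probability simplex and compares a histogram-based entropy estimate. The concentration values in `ALPHAS`, the function names, and the binning are assumptions made for this example; they are not taken from D4MORL.

```python
import math
import random

# Hypothetical concentration parameters; the values used in D4MORL may differ.
ALPHAS = {"uniform": 1.0, "dirichlet_wide": 5.0, "dirichlet_narrow": 50.0}

def sample_preference(kind, n_obj=2, rng=random):
    """Draw one preference vector on the simplex from a symmetric Dirichlet."""
    alpha = ALPHAS[kind]
    # Normalized Gamma draws follow a Dirichlet distribution;
    # alpha = 1 gives the uniform distribution over the simplex.
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(n_obj)]
    total = sum(draws)
    return [d / total for d in draws]

def empirical_entropy(samples, bins=20):
    """Histogram entropy (in nats) of the first preference weight."""
    counts = [0] * bins
    for w in samples:
        counts[min(int(w[0] * bins), bins - 1)] += 1
    n = len(samples)
    return -sum(c / n * math.log(c / n) for c in counts if c)

rng = random.Random(0)
entropies = {
    kind: empirical_entropy([sample_preference(kind, rng=rng) for _ in range(5000)])
    for kind in ALPHAS
}
```

Under these assumed parameters, the estimated entropy decreases from the uniform to the wide to the narrow distribution, which mirrors the High, Medium, and Low levels described above.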

On the other hand, we also design the Expert vs. Amateur distributions, which are measured by trajectories’ return. In short, Experts are sampled from a fully trained behavioral policy, while Amateurs are sampled from a noisy behavioral policy.
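One plausible way to obtain a noisy behavioral policy from a trained one is to perturb some of its actions at random. The sketch below is an illustration only and not the procedure used to build D4MORL: the wrapper name `make_amateur_policy`, the Gaussian noise model, the parameters `noise_prob` and `noise_std`, and the [-1, 1] action range are all assumptions.

```python
import random

def make_amateur_policy(expert_policy, noise_prob=0.5, noise_std=0.3, rng=random):
    """Wrap an expert policy so that some of its actions are randomly perturbed.

    All parameters are hypothetical; they are not the D4MORL settings.
    """
    def amateur_policy(observation):
        action = expert_policy(observation)
        if rng.random() < noise_prob:
            # Add Gaussian noise per action dimension, then clip to the
            # assumed [-1, 1] action range.
            action = [max(-1.0, min(1.0, a + rng.gauss(0.0, noise_std)))
                      for a in action]
        return action
    return amateur_policy
```

Rolling out the wrapped policy would then yield trajectories with lower and more varied returns than the expert's, which is the property the Amateur datasets are meant to capture.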

Leveraging the D4MORL dataset and benchmark, we move on to train the Pareto-Efficient Decision Agents (PEDA), which are a family of offline MORL algorithms. Specifically, we build upon return-conditioned offline models, including Decision Transformer (Chen et al., 2021) and RvS (Emmons et al., 2021), by making three simple yet very effective changes:

1) Concatenating the preference as part of the input tokens;
2) Using preference-weighted return-to-go or average return-to-go;
3) Using a linear regression model to find the desired return-to-go (rtg) from the preference.

Notice that 2) and 3) together help us find the correct return-to-go for each objective conditioned on the preference. In short, we should avoid naively setting the initial return-to-go to the highest value seen in the dataset, as this value would not be achievable by our agent under a different preference.
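Changes 2) and 3) can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the PEDA implementation: the function names are hypothetical, the weighting is assumed to be an elementwise product of the preference and the per-objective return-to-go, and the regression is assumed to fit each objective's return as a linear function of that objective's preference weight.

```python
def returns_to_go(rewards):
    """Per-objective return-to-go: suffix sums over a list of reward vectors."""
    running = [0.0] * len(rewards[0])
    rtg = [None] * len(rewards)
    for t in range(len(rewards) - 1, -1, -1):
        running = [acc + r for acc, r in zip(running, rewards[t])]
        rtg[t] = running
    return rtg

def weight_by_preference(rtg, preference):
    """Scale each objective's return-to-go by its preference weight (assumed form)."""
    return [[w * g for w, g in zip(preference, step)] for step in rtg]

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def fit_return_models(preferences, total_returns):
    """Fit one line per objective: return_i as a function of preference weight w_i."""
    n_obj = len(preferences[0])
    return [fit_line([p[i] for p in preferences], [g[i] for g in total_returns])
            for i in range(n_obj)]

def initial_rtg(models, preference):
    """Predict an achievable initial return-to-go for a given preference."""
    return [slope * w + intercept for (slope, intercept), w in zip(models, preference)]
```

At evaluation time, `initial_rtg` would replace the naive choice of the largest return in the dataset: the agent is conditioned on a target that the data suggests is reachable under the requested preference.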

Experiments

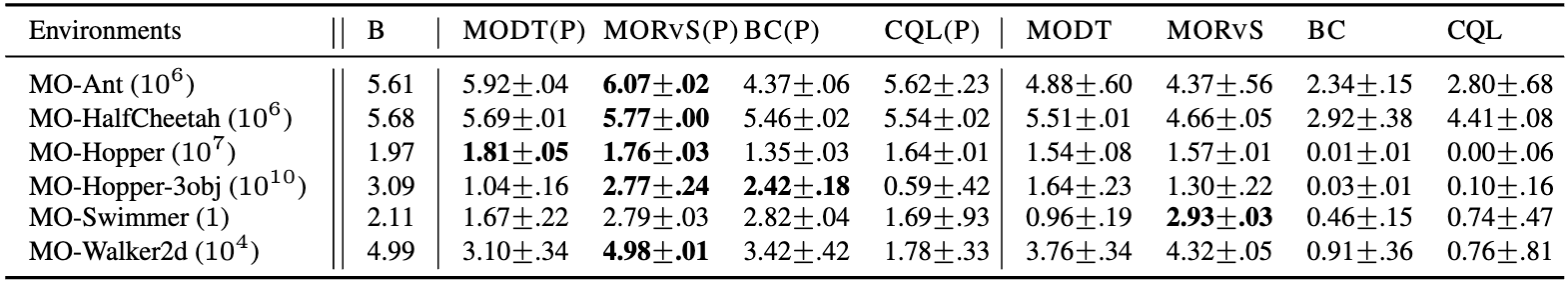

In the chart below, PEDA trained on the Amateur dataset outperforms the Amateur behavioral policy as measured by hypervolume, showing that the agent learns to do better than the policy that generated its training data.
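For readers unfamiliar with the metric, hypervolume measures the region dominated by a set of achieved returns relative to a reference point, so a larger value indicates a better Pareto front. The sketch below computes it for two objectives that are both maximized; the function name and the choice of reference point are assumptions for illustration, and the benchmark's own evaluation code may differ.

```python
def hypervolume_2d(points, reference=(0.0, 0.0)):
    """Area dominated by `points` and bounded below by `reference` (maximization)."""
    ref_x, ref_y = reference
    # Keep only points that strictly improve on the reference in both objectives,
    # then sweep from the largest first objective to the smallest.
    candidates = sorted(
        (p for p in points if p[0] > ref_x and p[1] > ref_y),
        key=lambda p: p[0],
        reverse=True,
    )
    area = 0.0
    best_y = ref_y
    for x, y in candidates:
        if y > best_y:
            # This point adds a new horizontal strip above what is already covered.
            area += (x - ref_x) * (y - best_y)
            best_y = y
    return area

front = [(3.0, 1.0), (2.0, 2.0), (1.0, 3.0)]
print(hypervolume_2d(front))  # 6.0
```

Dominated points contribute nothing: adding `(1.0, 1.0)` to `front` leaves the result unchanged.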

On the other hand, PEDA variants closely follow the conditioned return-to-go for each objective under all preferences.

Demos

The Cheetah on the left prioritizes running fast while the Cheetah on the right prioritizes saving energy.


The Ant on the left prioritizes running horizontally while the Ant on the right prioritizes running vertically.

External Links

Paper: https://openreview.net/forum?id=Ki4ocDm364

GitHub: https://github.com/baitingzbt/PEDA

Poster: https://drive.google.com/file/d/1kiUYbYcfAdd8wLLK7x26NSYCqfWk6mGr/view

Cite:

@inproceedings{zhu2023paretoefficient,
  title = {Scaling Pareto-Efficient Decision Making via Offline Multi-Objective {RL}},
  author = {Baiting Zhu and Meihua Dang and Aditya Grover},
  booktitle = {International Conference on Learning Representations},
  year = {2023},
  url = {https://openreview.net/forum?id=Ki4ocDm364}
}